I believe all of these things are true:

Large language models (LLMs) are interesting, fun, and have the potential to aid in productivity.

Like everybody else who spends their day at a keyboard and isn’t given enough to do, I’ve played around with ChatGPT and a couple of the other implementations of the technology, and, hey look, I can make it talk like a gangster! Ha ha! And now it’s writing me a song about broccoli! That’s pretty great. The technology that makes this possible is both interesting and impressive. It is undoubtedly a milestone.

Likewise, I’ve seen the articles and essays and code cranked out by LLMs. Some of them are even correct, or close to correct, or, um, useful straw men.

I’m a coder, and my experience with ChatGPT-4 produced and reviewed code hasn’t been great. It knows language syntax and certainly has read all the documentation, but the results are lackluster where they’re not entirely wrong. If you’re looking for basic guidance or to solve a common problem, then those details are going to be in the training corpus and the system will happily burp them up. But LLMs don’t have any awareness of less popular domains, or a codebase’s history, or the culture that produced it, or a thousand other intangibles, and you can tell. Even when it’s right, it feels wrong.

But a lot of very smart people are finding value in LLMs, have gotten good results, and see a thousand ways to use them. Maybe I don’t have the knack for writing prompts. Maybe I’m too old or too myopic or too calcified. Maybe thirty years of increasingly hype-driven Next Big Things have left me a shattered, empty husk that can no longer experience excitement or joy.

I mean, that’s totally possible.

Large language models are not “artificial intelligence” and never will never be.

LLMs are eager idiots who have done the reading. They will always provide an answer, and if they have to invent one to do it, well, dammit, they’ll invent one. They are next-word prediction engines and so they predict the next word. That’s it. That’s the tweet. There’s no understanding of the words they produce, or of the ideas those words compose.

Any and all cognition in this scenario is done by the human reading the output. The emotional impact, the insight, the cleverness in any LLM result comes from the person in whose lap it lands, because the machine neither knows or cares (and, in fact, is incapable of knowing or caring).

All the sci-fi doomsaying about artificial general intelligence and our robot overlords shouldn’t be attached to LLMs any more than the space program should be attached to the invention of the wheel. It’s maybe a nod in that direction, but don’t go planning your “One small step” speech just yet.

Large language models, as currently constructed, have profound ethical issues.

LLMs suffer from that tech-endemic disease We’ll Figure It Out Later. Damn the torpedoes (where the torpedoes are composed of sensible questions with non-obvious answers), full speed ahead.

Training corpuses are gathered willy-nilly, without regard to intellectual property, and are (best-case) turned into opt-out after the fact. That’s not how science (or business, as long as we’re waist-deep in fantasy, talking about tech and ethics) is supposed to be done. There’s a green field to roll into and petty things like permission are small, endangered animals to be churned into mulch.

The models themselves are opaque, and effectively launder the origin of the data they use to produce results, while hiding the initial prompts that guide those results. It’s like using a browser that doesn’t show the URL and just maybe elides some of the text that it doesn’t want you to see. “I heard it from a guy” is not a falsifiable claim, and neither is “ChatGPT told me.”

LLMs stomp on the accelerator in the rush to the bottom. Full-time workers have become contractors have become gig workers and are now becoming mere clean-up editors for LLMs, for a while anyway. It doesn’t matter if the result is worse, it’s so much cheaper. Buggy-whip manufactures don’t have an obligation to continue to employ buggy-whip assemblers, but it might be worth our time to figure out if we want to sever the implicit human-to-human connection that was previously central to our information consumption. We’ll no longer just be bowling alone, but thinking alone.

And all this is just a small part of the fact that the introduction, adoption, and soon-to-be universality of LLMs is market driven. Horrible things will be done in the name of simply getting to do them first. There’s already an LLM-involved suicide. As is usual, vulnerable populations have not been invited to the sticky orgy of capitalism that is the coming paradigm shift. The first to get hurt are always the last to have a say.

Expanding on the previous point, large language models are going to do monumental societal damage through the near total destruction of our information landscape.

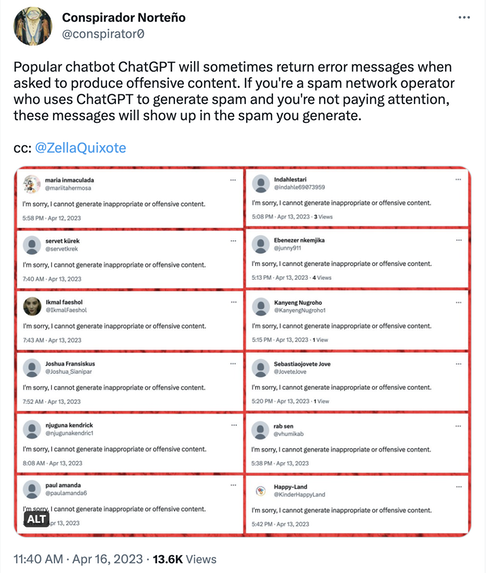

And, of course, squatting atop the pile of ethical concerns, like an oily toad or Steve Bannon, is disinformation. LLMs don’t “lie” any more than bingo machines lie — they just drop what they drop. But the result can be jury-rigged by the people running them. Do you really think the malignant villains who ran Cambridge Analytica, or manufactured anti-(trans, critical race theory, immigration, witch; pick one) moral panics, or introduced the brand-new phrase “Jewish space lasers” are not going to use this particular nuclear weapon? It is the stuff of their dreams.

The already blasted heath of our information ecosystem is going to be turned into a single smooth pane of glass, where facts will have no purchase. If a literal clown like Donald Trump and and a handful of backwater forums could summon up a real-world attack on the Capitol during the democratic transition of power, what does the world look like when those forces are expanded by a few orders of magnitude? What does the fascist “flood the zone with shit” strategy look like when the shit-production is not only fully automated, but infinite and cheap? Our institutions could barely deal with the sluice of effluvia being vomited out by bad actors in 2020. 2024 is going to make that look like a gardening newsgroup from 1993.

We are living in the last days of an antediluvian information ecosystem. The dam has leaked for years. It’s about to burst.

But, hey, a song about broccoli. Ha ha!

Large language models aren’t going back in the bottle.

And with all that said: This is what we’re stuck with. LLMs may join the set of tools that people use every day as a matter of course. Or they may not. The hype could die, the revolution could end, the breakneck pace of previously-unimaginable invention could top out and sputter and leave us shy of anything that actually, consistently, universally works. LLMs might be consigned to the same niche-useful, half-there limbo of every previous generation of “AI,” like expert systems and hotdog detectors and ducking autocorrect.

But, either way, we’ll have to learn to live (or not, depending on how 2024 turns out) with LLMs pumping ceaseless garbage into every media channel and societal narrative that exists, from here on out. The Internet made one-to-N publishing possible for anybody. We were naïve to think that was an unalloyed good. The inevitable weaponization of LLMs are the ultimate expression of why.